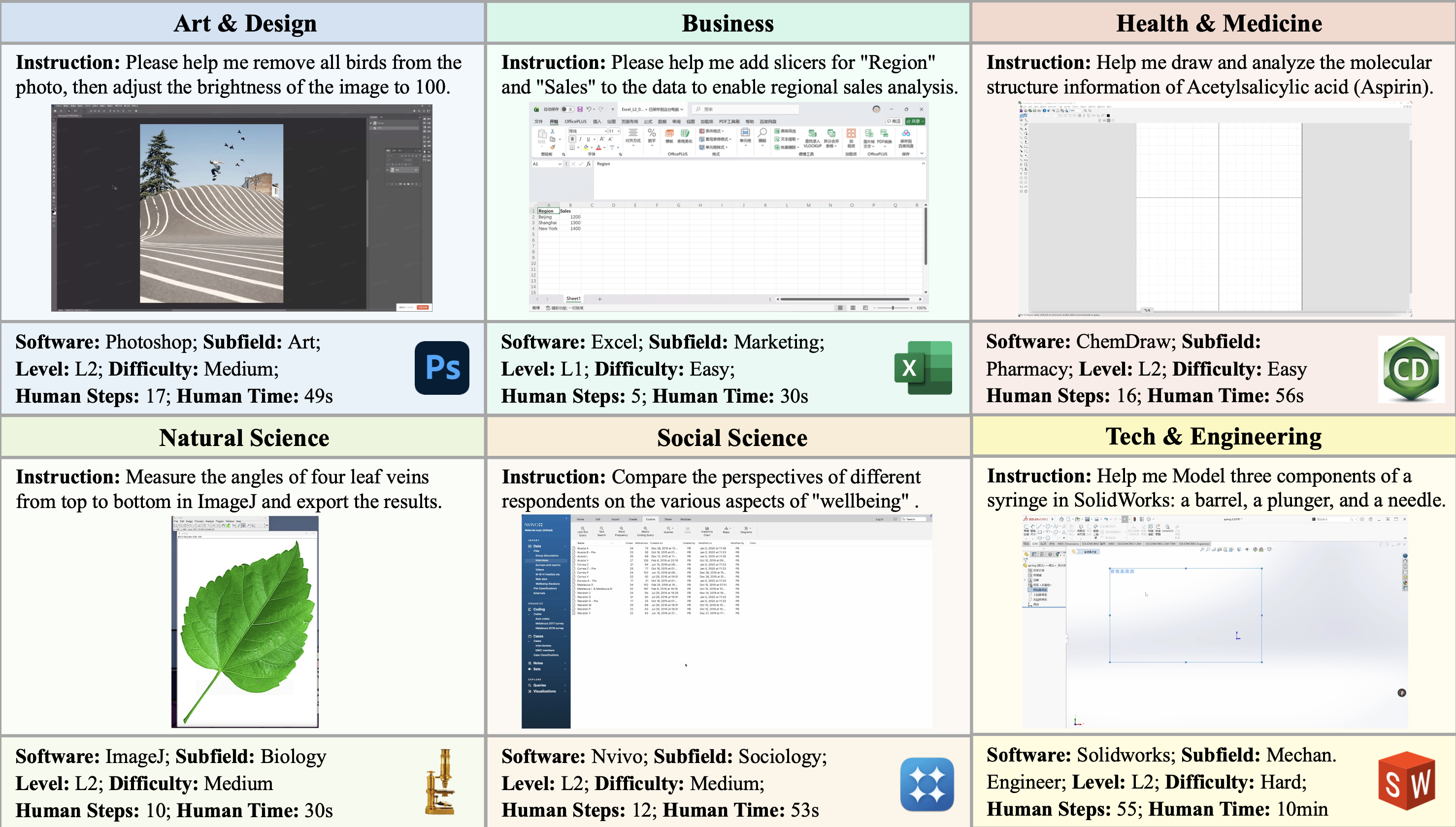

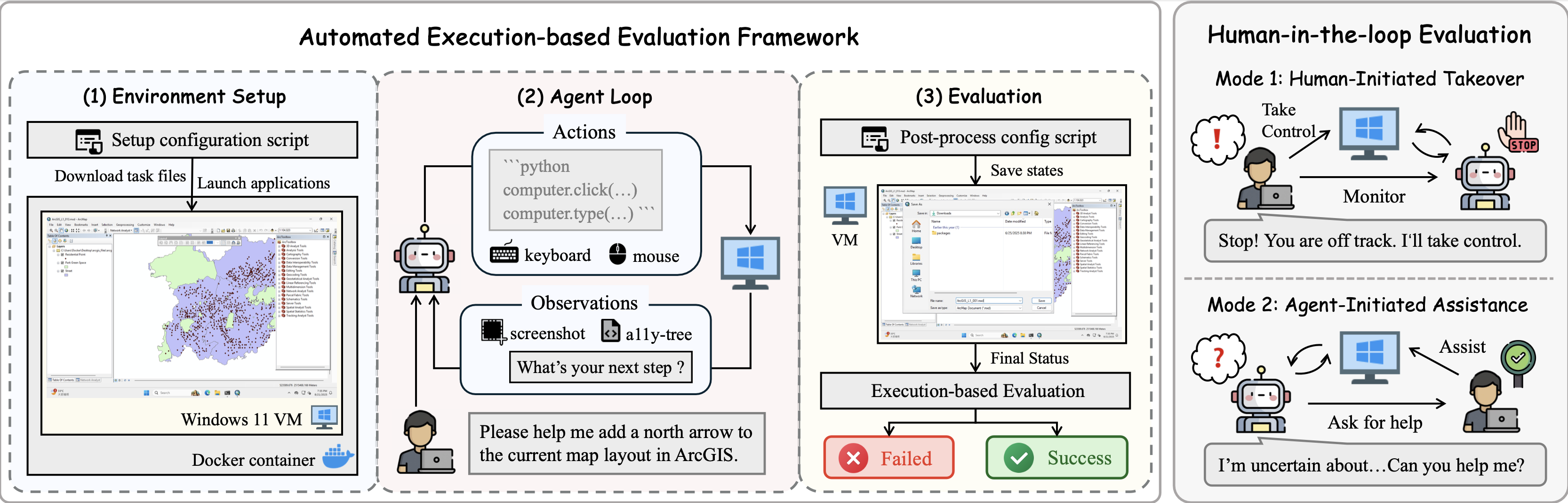

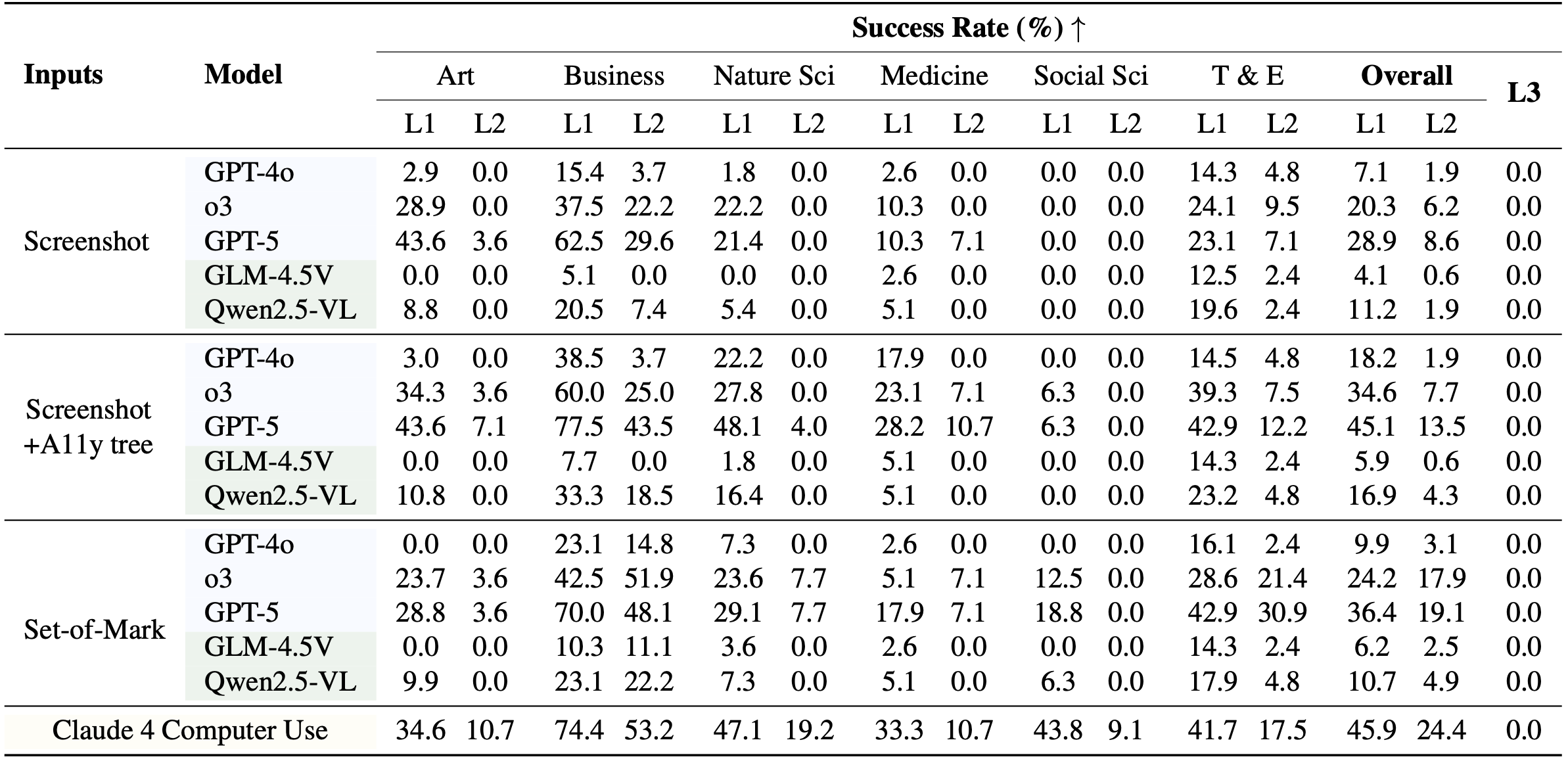

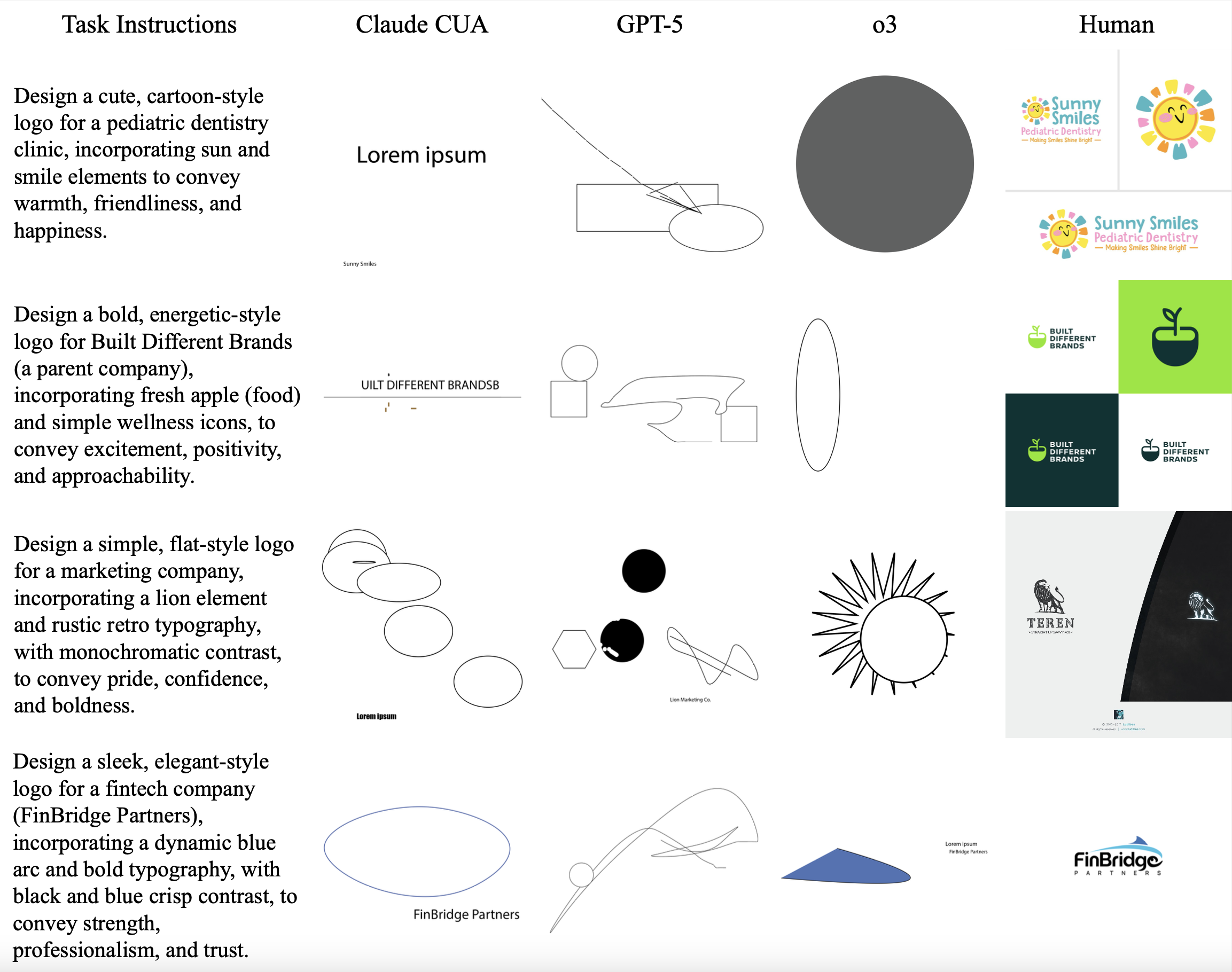

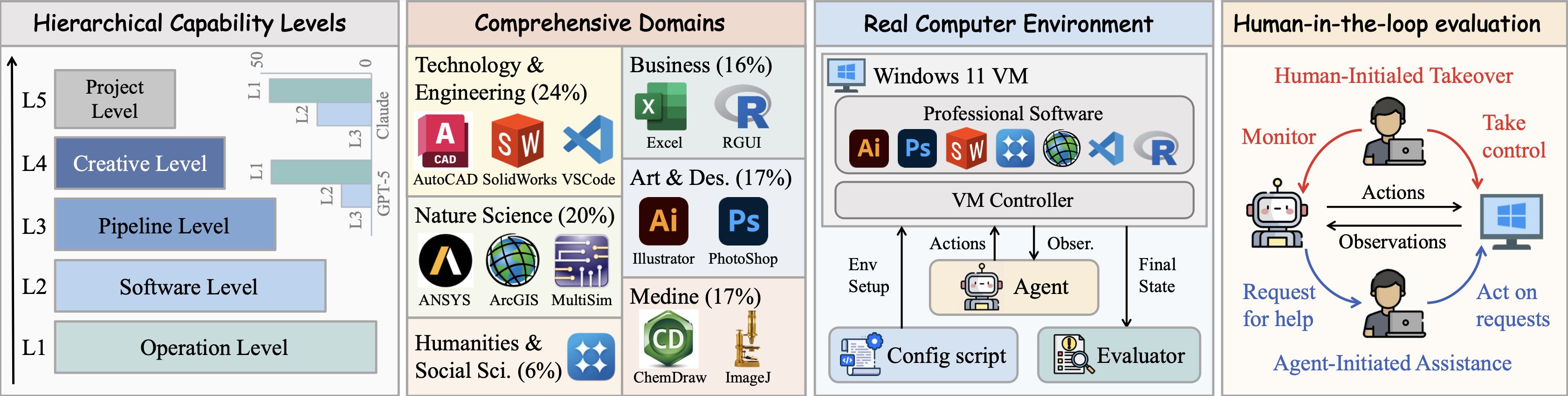

🌈 ProSoftArena. We establish the first hierarchical taxonomy of agent capabilities in professional software environments and curate a comprehensive benchmark covering 6 disciplines, 20 subfields, and 13 core professional applications. We construct a VM-based real computer environment for reproducible evaluation and, uniquely, incorporate a human-in-the-loop evaluation paradigm.